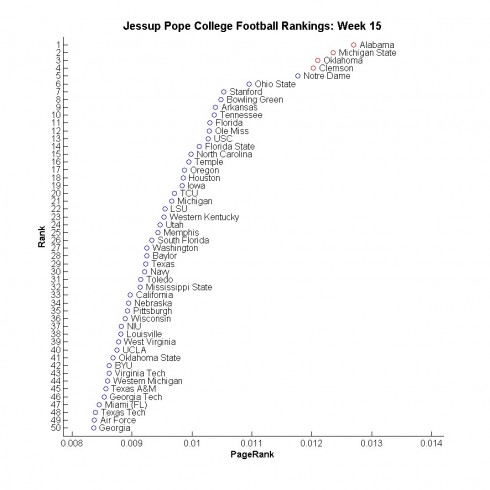

Alabama, Michigan State, Oklahoma, and Clemson are our top four teams, in that order, for week 15. Notre Dame falls to 5th, Ohio State drops to 6th, and Stanford rises to 7th on the strength of its convincing win over USC (#13). All the major moves were by teams that played this weekend, and most of those moves were upward. Strangely, Texas (#29) has two wins over College Football Playoff (CFP) top 25 teams – very few teams can claim that distinction!

Prediction quality measurement

Out of the 87 games involving teams ranked in the CFP top 25, the CFP rankings continue to correctly predict the winner (adjusted for home field advantage) 67% of the time. We can compute the probability of being that successful over 87 games by pure guessing: it happens less than .001 of the time, or fewer than 1 in 1000 attempts. This “p value” comes from a binomial distribution, which describes the number of successes in a fixed number of 50/50 guesses. Our model continues to outperform the CFP system, correctly predicting the outcome 74% of the time for these same games. As of this weekend there have been only 14 games in which our system makes a different prediction than the CFP rankings, and ours has “won” this competition 10 times. If the two systems were equal in predictive ability, the probability of this happening would be less than .029, or about once in 35 tries.
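For readers who want to try the binomial calculation themselves, here is a minimal sketch in Python. It is not the code behind our rankings. The function name `binomial_tail` is made up for illustration, and the count of 58 correct picks is an assumption (67% of 87, rounded). The exact p value depends on whether you count "at least k" or "more than k" successes, so the sketch prints both.

```python
from math import comb


def binomial_tail(n: int, k: int, p: float = 0.5) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p), i.e. the upper tail."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# CFP rankings vs. pure guessing.
# Assumption: 58 correct picks, which is 67% of 87 games after rounding.
print(f"at least 58 of 87: {binomial_tail(87, 58):.5f}")
print(f"more than 58 of 87: {binomial_tail(87, 59):.5f}")

# Head-to-head: 14 games where the two systems disagree, ours right 10 times.
# Under the "equal ability" hypothesis each disagreement is a coin flip.
print(f"at least 10 of 14: {binomial_tail(14, 10):.4f}")
print(f"more than 10 of 14: {binomial_tail(14, 11):.4f}")
```

For the 14-game comparison, the "more than 10" tail works out to 470/16384, or about .029, while the "at least 10" tail is 1471/16384, or about .09. The choice of convention matters when the sample is this small.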

A bug – or a feature?

George Box famously said “all models are wrong, but some are useful.” Similarly, we know that our system is flawed and this process of producing rankings each week has brought some of those flaws to the forefront. One of the goals of successful modeling is to seek out flaws so as to eliminate or minimize them. If we are trying to build better models, what good would it do to hide the flaws?

The primary weakness we have found in our system is that teams that play more games are ranked higher than those that play fewer games. In fact, nearly every team that played this weekend, win or lose, moved up in our rankings because of it. This explains why Clemson edged back into the top 4 and Notre Dame fell out – all three teams that moved ahead of them played this weekend. (On a side note, I am genuinely bummed that we have the exact same top four – though in a different order – as the CFP.) This flaw might also explain why we expected a large Michigan State win over Iowa and a narrow Stanford win over USC, when in fact Michigan State won narrowly and Stanford won by a wide margin. We will definitely address this issue during the college football offseason. Interestingly, the fact that our model is predicting better than its primary competitor, the CFP, suggests that it might be a feature instead of a bug!

In later posts, we will present some additional comparative analysis of our rankings and, further, list our predicted bowl winners and win margins.

Previous JP rankings posts

If you are interested in learning more about our rankings, feel free to read some of our previous posts, linked below.

Post 1: Week 10: Introduction of JP ranking system and initial rankings (week 10)

Post 2: Week 11: Rankings and additional information on how the system works

Post 4: Week 12: Addendum – Tears on my slide rule, or, What happened to dear old Texas A&M

Post 6: Week 14: Rankings and a measure of comparative predictive performance